Anyone who has played CS will be familiar with Valve. Last year it launched a new SteamVR beta in response to Oculus' "asynchronous timewarp" technology. Many readers may not know Oculus' asynchronous timewarp (ATW), but if you often listen to talks by China's more technically minded VR startups, the term will certainly be familiar. For example, Fireworks Workshop claims to be the first company in China to master ATW, and its CTO Wang Mingyang mentioned the technology at the company's first press conference. He tried to explain it at the time, but then gave up and said only, "Just know that this is very good." Since then, CEO Dianchi has also mentioned ATW on various occasions, including in his WeChat circle of friends. Another company has been doing the same.

Posts from the WeChat circles of friends of Fireworks Workshop CEO Dianchi and music CEO Chen Chaoyang

What is ATW?

Asynchronous time warp is written Asynchronous Timewarp in English and abbreviated ATW. Simply put, it is a technique for generating intermediate frames. When the game cannot maintain a sufficient frame rate, ATW generates intermediate frames to compensate, thereby keeping the picture refresh rate high. John Carmack, who proposed the technique, is currently the CTO of Oculus.

In an interview, Wang Mingyang explained ATW as follows:

Under normal circumstances, most mobile phone screens refresh at 60 Hz, which means that in the ideal case the phone processes 60 frames per second. From data to rendered image there is therefore a delay of 1000 / 60 ≈ 16.67 ms.

So how do you offset this delay? John Carmack proposed collecting a large amount of gyroscope data to predict the rotation and position of your head 16.67 ms later, and then rendering according to that predicted pose. He called this technique Timewarp.
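The arithmetic and the prediction step can be sketched in a few lines. This is only an illustration under simplifying assumptions: it tracks a single axis (yaw) and assumes the angular velocity reported by the gyroscope stays constant over the look-ahead interval. The names `predict_yaw` and `FRAME_TIME_S` are hypothetical and not taken from any SDK; a real implementation would work with full 3D orientations.

```python
import math

REFRESH_HZ = 60.0
FRAME_TIME_S = 1.0 / REFRESH_HZ  # about 16.67 ms per frame at 60 Hz


def predict_yaw(yaw_rad, yaw_rate_rad_s, lookahead_s=FRAME_TIME_S):
    """Extrapolate the head yaw, assuming constant angular velocity.

    yaw_rad:        current yaw angle from the tracker, in radians
    yaw_rate_rad_s: angular velocity from the gyroscope, in radians/second
    lookahead_s:    how far into the future to predict, in seconds
    """
    return yaw_rad + yaw_rate_rad_s * lookahead_s


if __name__ == "__main__":
    # A head turning at 120 degrees/second moves about 2 degrees in one frame.
    predicted = predict_yaw(0.0, math.radians(120.0))
    print(f"frame time: {FRAME_TIME_S * 1000:.2f} ms")
    print(f"predicted yaw: {math.degrees(predicted):.2f} degrees")
```

The scene is then rendered for the predicted angle rather than the measured one, so that the image matches where the head will be when the frame reaches the screen.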

But then another problem arises. A typical VR scene is very complicated, and it is difficult to guarantee that every frame finishes rendering within 16.67 ms; in other words, we can hardly guarantee that every application runs at 60 fps. So Carmack proposed ATW, or asynchronous Timewarp.

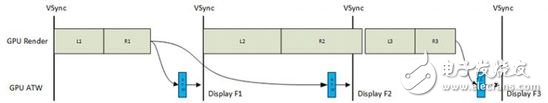

He split the rendering pipeline of a VR application into two threads: the rendering thread (producer) and the Timewarp thread (consumer). The two work asynchronously: the producer generates framebuffers, and the consumer applies Timewarp to the most recent one and then presents it on the screen. In other words, regardless of your game's current fps, Timewarp is designed to always run at 60 fps (depending on the refresh rate).

This is the core idea of ATW: decouple Timewarp from framebuffer generation, and use Timewarp running at the full refresh rate to keep latency low.
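The producer/consumer split can be sketched with two ordinary threads. This is a toy model, not Oculus' implementation: `time.sleep` stands in for both scene rendering and waiting for vsync, the "framebuffer" is just a frame number, and the function name `run_pipeline` and its parameters are assumptions made for illustration.

```python
import threading
import time


def run_pipeline(render_time_s, refresh_hz, num_vsyncs):
    """Return the frame id presented at each vsync (-1 means no frame yet)."""
    latest = {"frame_id": -1}   # most recently completed framebuffer
    lock = threading.Lock()
    stop = threading.Event()
    presented = []

    def render_thread():        # producer: runs at whatever rate it can manage
        frame_id = 0
        while not stop.is_set():
            time.sleep(render_time_s)        # stand-in for rendering the scene
            with lock:
                latest["frame_id"] = frame_id
            frame_id += 1

    def timewarp_thread():      # consumer: runs once per display refresh
        period = 1.0 / refresh_hz
        for _ in range(num_vsyncs):
            time.sleep(period)               # stand-in for waiting on vsync
            with lock:
                frame_id = latest["frame_id"]
            # A real Timewarp thread would warp this frame to the newest
            # head pose here before presenting it.
            presented.append(frame_id)
        stop.set()

    producer = threading.Thread(target=render_thread)
    consumer = threading.Thread(target=timewarp_thread)
    producer.start()
    consumer.start()
    consumer.join()
    producer.join()
    return presented


if __name__ == "__main__":
    # Rendering takes 40 ms per frame, but the display is fed at 60 Hz.
    print(run_pipeline(render_time_s=0.040, refresh_hz=60.0, num_vsyncs=12))
```

When rendering is slower than the refresh period, the same frame id shows up at several consecutive vsyncs: the consumer never waits for the producer, which is the point of making the two asynchronous.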

The pioneer of ATW in VR is Oculus. It applied the technology on Gear VR more than two years ago, and on March 25 last year it announced ATW support in the PC SDK.

How does it work?

Image source: Oculus blog (the same below)

Oculus gave a detailed explanation of ATW on its blog. As shown in the figure above, the GPU renders the left and right eyes separately, and an ATW pass is inserted before the image is displayed. For the frame on the left, rendering completes in time and the result is displayed directly. For the second frame, in the middle, rendering does not complete in time; if nothing were done, the picture would judder. With ATW, the previous frame is recalled and displayed again, adjusted for the headset's movement, so that the frame rate is maintained.
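The "redisplay the previous frame, adjusted for head movement" step can be illustrated with a deliberately crude warp. The sketch below handles only a yaw change and approximates the reprojection as a uniform horizontal pixel shift under a pinhole camera model; a real implementation reprojects the whole image on the GPU using the full rotation. All function names are hypothetical, and the sign convention (positive yaw shifts image content to the left) is an assumption.

```python
import math


def yaw_to_pixel_shift(delta_yaw_rad, image_width_px, horizontal_fov_rad):
    """Approximate horizontal shift in pixels for a small yaw change."""
    focal_px = (image_width_px / 2) / math.tan(horizontal_fov_rad / 2)
    return round(focal_px * math.tan(delta_yaw_rad))


def warp_row(row, shift_px, fill=0):
    """Shift one row of pixels; a positive shift moves content to the left."""
    n = len(row)
    out = [fill] * n
    for x in range(n):
        src = x + shift_px
        if 0 <= src < n:
            out[x] = row[src]
    return out


def present(new_frame, previous_frame, shift_px):
    """Choose what to show at this vsync, following the description above.

    new_frame is None when rendering missed the deadline; in that case the
    previous frame is reused, shifted to match the latest head pose.
    """
    if new_frame is not None:
        return new_frame
    return [warp_row(row, shift_px) for row in previous_frame]


if __name__ == "__main__":
    previous = [[1, 2, 3, 4, 5]]
    print(present(None, previous, shift_px=1))  # [[2, 3, 4, 5, 0]]
```

Pixels that slide in from outside the old frame have no data, which is why the example fills them with a placeholder value.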

Is this technology difficult?

According to Wang Mingyang, ATW itself is very simple, and the core code is only five lines. To achieve the best results, however, you need the following:

1. The GPU must support GPU preemption. This is rarely a problem on mobile, where most GPUs support it, but most desktop GPUs do not;

2. The system should preferably support main surface writes. This depends partly on the GPU, but even more on the operating system;

3. The GPU must have high performance. This is easy to understand: the Timewarp thread adds to the rendering burden.

Oculus implemented ATW on Gear VR first because of the first point above. Bringing ATW to the PC platform required support from Microsoft, NVIDIA and AMD; NVIDIA developed VRWorks and AMD has LiquidVR.

Among domestic manufacturers, Musician Chen Chaoyang said that ARM provides Front Buffer and Context Priority support for Big VR to implement ATW on mobile VR.

Fireworks Workshop, which has no custom hardware, has modified parts of the Timewarp implementation on the basis of Oculus' approach. "We monitor the working condition of the Timewarp thread from the rendering thread, dynamically balance the GPU load, and ensure that the Timewarp thread runs at a relatively high frame rate," Wang Mingyang said.
