Many people feel frustrated when technology doesn’t work as expected or runs counter to human intuition. It is more unsettling still that the same technology can influence our emotions and shift our moods without being able to perceive them. That is changing rapidly: computers are becoming increasingly capable of recognizing human emotions, and the field is growing into a major industry.

According to a recent report, the global emotional computing market is projected to grow from $12.2 billion in 2016 to $53.98 billion by 2021. Market research firm MarketsandMarkets notes that the technology is being widely adopted across industries, with rising demand for software that extracts facial features. The trend shows how deeply emotional intelligence is being integrated into digital systems.
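To put the projection in perspective, the implied compound annual growth rate can be worked out from the two figures quoted above. The following is a minimal sketch, assuming five annual compounding periods between 2016 and 2021; the function name `cagr` is illustrative and does not come from the report.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate as a fraction (0.10 means 10% per year)."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


# Figures quoted in the article, in billions of US dollars.
# Assumption: growth compounds once per year from 2016 to 2021.
rate = cagr(12.2, 53.98, years=2021 - 2016)
print(f"Implied growth: {rate:.1%} per year")
```

Under these assumptions, the quoted figures imply growth of roughly 35% a year.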

Emotional computing, also known as affective computing or artificial emotional intelligence, may still be unfamiliar to many, but it has already found numerous applications in research. For instance, Professor Toshihiko Yamasaki of the University of Tokyo is developing a machine learning system to evaluate the quality of TED talks. His goal is to train the system to watch a talk and predict how an audience will react emotionally. While this may seem abstract, the project demonstrates the potential of AI to understand human sentiment.

Yamasaki and his team analyzed 1,646 TED videos, focusing on multimodal features such as language and sound. Their results were impressive: using sentiment analysis, their method achieved an average accuracy of 93.3% in identifying emotional significance. This suggests that machines can assess whether a speaker connects emotionally with an audience.

Beyond public speaking, emotional computing is finding practical uses in education. Researchers at North Carolina State University have developed software that predicts student engagement by analyzing facial expressions. By tracking movements such as raised eyebrows or mouth expressions, the system can determine if a student is attentive, confused, or engaged. The researchers believe this could revolutionize educational data mining.

In the private sector, companies like Affectiva are leading the way in emotion recognition. Based in Boston, the company offers software that measures facial expressions through cameras. According to its chief marketing officer, Gabi Zijderveld, the technology can analyze real-time video or recorded footage to provide feedback on emotional responses. This has significant implications for advertising, where companies can gauge consumer reactions to ads and make adjustments accordingly.

Affectiva’s core technology is also being integrated into other industries, including gaming, education, robotics, and healthcare. Even in human resources, its software is being used to analyze interviewees’ emotional responses during online recruitment. This helps companies make more informed hiring decisions based on emotional cues.

Richard Yonck, founder of Future Intelligence Consulting, believes that the "emotional economy" will expand rapidly, creating an ecosystem of emotionally aware systems. He predicts that these technologies will not only improve efficiency but also reshape how we interact with machines on both personal and commercial levels.

Affectiva has built one of the largest emotion databases, having analyzed over 4.7 million faces across 75 countries. Zijderveld emphasizes that the data is collected with consent, ensuring ethical use. This vast dataset is crucial for training deep learning models, as it helps avoid biases that come from limited or unrepresentative samples.

The data also reveals demographic and cultural differences in emotional expression. For example, women tend to smile more and for longer periods than men. Regional variations also play a role, showing how emotions are expressed differently around the world.

As emotional computing continues to evolve, it’s clear that it will drive new forms of innovation. Yonck believes that just like early computer technology, these systems will lead to increasingly complex and intelligent products.

Soon, people will have more direct interactions with emotional AI. For example, Hubble Connected’s Hugo smart camera uses Affectiva’s emotional AI to recognize users, monitor home environments, and act as a photographer. Described as “a friend at home,” it represents the next step in human-machine interaction.

Despite this potential, Yonck warns that AI development must be guided by ethical considerations. As we build systems that understand human emotions, we must ensure they align with human values and avoid unintended consequences. The future of emotional computing holds great promise, but it also requires careful oversight.

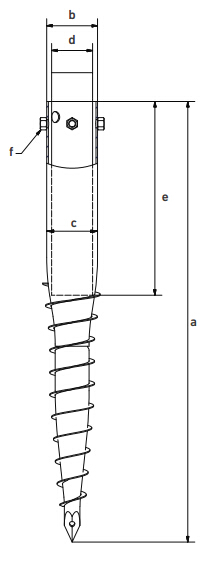

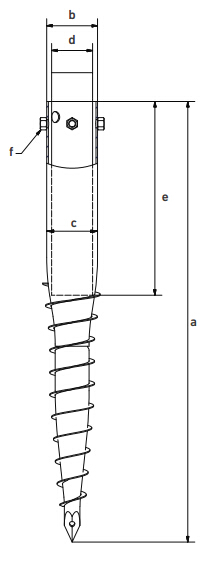
